Understanding TCP under poor network coverage

When we use our smartphones in areas with poor network coverage, such as most Parisian subway lines, the following scenario is common: we try to load a resource (a web page, a JSON document, etc.) and get nothing but an endless loading animation. Then, after several retries, the resource suddenly appears.

We wanted to understand why the resource did not load on the first attempt even though the network seemed to be working. To do so, we carried out a low-level study of TCP, the transport protocol used by most requests.

Concept

We developed a client/server system to compare TCP and UDP performance in terms of download rate, latency, and loss rate.

Comparison

Measuring TCP efficiency over the communication channel provided by the subway's 2G cellular network comes down to comparing its performance with the best the channel can offer, which is why we used UDP. Because UDP has no flow control and no reliability mechanism, the channel's raw throughput can be evaluated by observing how packets are delivered.

Measurements

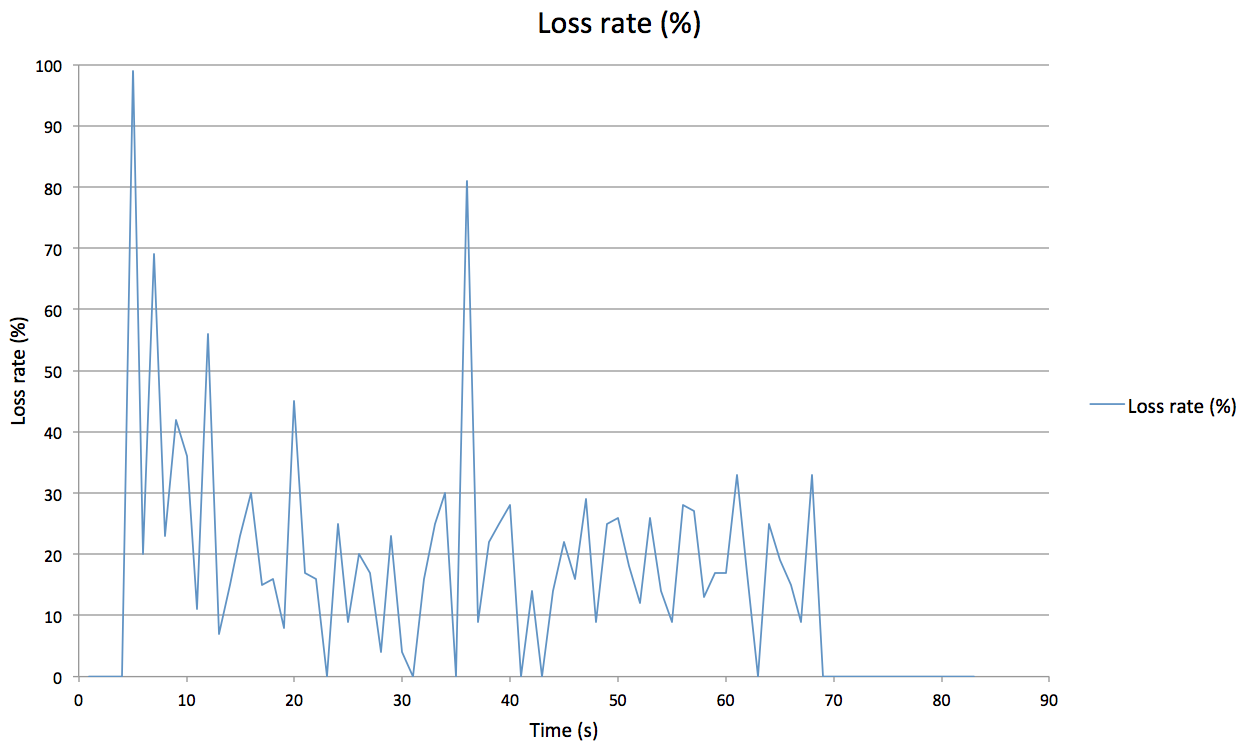

UDP lets us extract several properties of the communication channel. Since there is no flow control or reliability, flooding the network at a higher rate than the channel can handle gives a measure of its download rate. At a low sending rate, the gaps in the received sequence numbers give the loss rate. Finally, by looping received packet numbers back and forth between the client and the server, we can measure the latency. For TCP, the same information is available at the socket level.
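
The loss-rate and download-rate measurements described above boil down to simple arithmetic on what the receiver logs. Here is a minimal C sketch, assuming each datagram carries an increasing 32-bit sequence number and that the receiver records the numbers it sees over a measurement window of known duration. The `udp_stats` type and `udp_window_stats` function are hypothetical names, not the code used in the study, and losses before the first or after the last received datagram are invisible to this simple approach.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Statistics for one UDP measurement window. */
typedef struct {
    uint32_t received;  /* datagrams that actually arrived */
    uint32_t expected;  /* datagrams sent between first and last seen */
    double   loss_rate; /* fraction of expected datagrams missing */
    double   rate_bps;  /* received payload bits per second */
} udp_stats;

/* Derive loss rate and download rate from the sequence numbers logged by
 * the receiver. Assumes sequence numbers are strictly increasing (no
 * reordering, duplicates, or wrap-around), a simplification. */
udp_stats udp_window_stats(const uint32_t *seqs, size_t n,
                           size_t payload_bytes, double duration_s)
{
    udp_stats s = {0, 0, 0.0, 0.0};
    assert(seqs != NULL || n == 0);
    if (n == 0 || duration_s <= 0.0)
        return s;

    s.received  = (uint32_t)n;
    s.expected  = seqs[n - 1] - seqs[0] + 1;
    s.loss_rate = 1.0 - (double)s.received / (double)s.expected;
    s.rate_bps  = (double)n * (double)payload_bytes * 8.0 / duration_s;
    return s;
}
```

For example, receiving 10 of the 12 datagrams numbered 0 to 11 gives a loss rate of about 16.7%, in the range of the values reported below.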

The server is a simple C program using Berkeley sockets; the client is an iOS application that also uses Berkeley sockets.
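
For illustration, a stripped-down numbered-datagram sender and receiver could look like the C sketch below. It is not the authors' server: the 64-byte payload, the 1 s receive timeout, and the names `udp_send_numbered` and `udp_recv_numbered` are assumptions. It only relies on standard Berkeley socket calls (`sendto`, `recv`, and `setsockopt` with `SO_RCVTIMEO`).

```c
#define _POSIX_C_SOURCE 200809L
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/time.h>
#include <sys/types.h>
#include <unistd.h>

#define PAYLOAD_SIZE 64 /* assumed datagram payload size, in bytes */

/* Send `count` datagrams to `dst`, each starting with a 32-bit sequence
 * number in network byte order. UDP has no flow control: sendto() does
 * not wait for the receiver, and lost datagrams are never resent. */
int udp_send_numbered(int fd, const struct sockaddr_in *dst, uint32_t count)
{
    unsigned char buf[PAYLOAD_SIZE];
    assert(fd >= 0 && dst != NULL);
    memset(buf, 0, sizeof buf);
    for (uint32_t seq = 0; seq < count; seq++) {
        uint32_t net = htonl(seq);
        memcpy(buf, &net, sizeof net);
        if (sendto(fd, buf, sizeof buf, 0,
                   (const struct sockaddr *)dst, sizeof *dst) < 0)
            return -1;
    }
    return 0;
}

/* Store incoming sequence numbers until `max` are collected or no
 * datagram arrives for 1 s. Returns how many were stored. */
size_t udp_recv_numbered(int fd, uint32_t *seqs, size_t max)
{
    struct timeval tv = { .tv_sec = 1, .tv_usec = 0 };
    unsigned char buf[PAYLOAD_SIZE];
    size_t n = 0;

    assert(fd >= 0 && (seqs != NULL || max == 0));
    if (setsockopt(fd, SOL_SOCKET, SO_RCVTIMEO, &tv, sizeof tv) < 0)
        return 0;
    while (n < max) {
        ssize_t r = recv(fd, buf, sizeof buf, 0);
        if (r < 0)
            break;      /* timeout (EAGAIN/EWOULDBLOCK) or error */
        if ((size_t)r < sizeof(uint32_t))
            continue;   /* ignore datagrams too short to hold a number */
        uint32_t net;
        memcpy(&net, buf, sizeof net);
        seqs[n++] = ntohl(net);
    }
    return n;
}
```

To try it locally, bind a `SOCK_DGRAM` socket to `127.0.0.1` with port 0, read the assigned port back with `getsockname`, and point the sender at it; over a lossy link, gaps in the collected sequence numbers reveal the dropped datagrams.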

Results

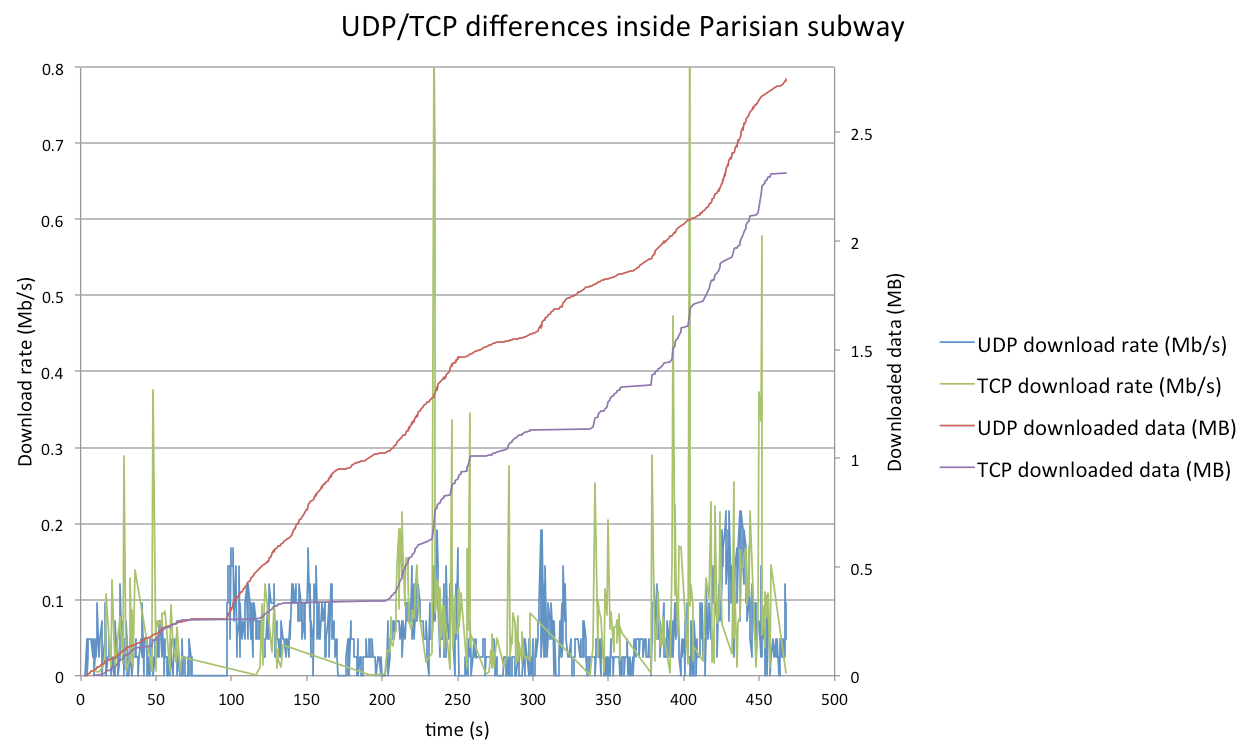

Using two identical iPhones on the same cellular operator's network, we took several measurements inside the Parisian subway and compared the behavior of both protocols.

We made the following observations:

- TCP download follows the same trend as UDP download, but it is slowed down by periods (lasting up to several minutes) during which the download simply stops.

- Latency is highly variable, ranging from usual network values to tens of seconds.

- The loss rate is also variable and regularly exceeds 10%, sometimes even 20%.

Analysis

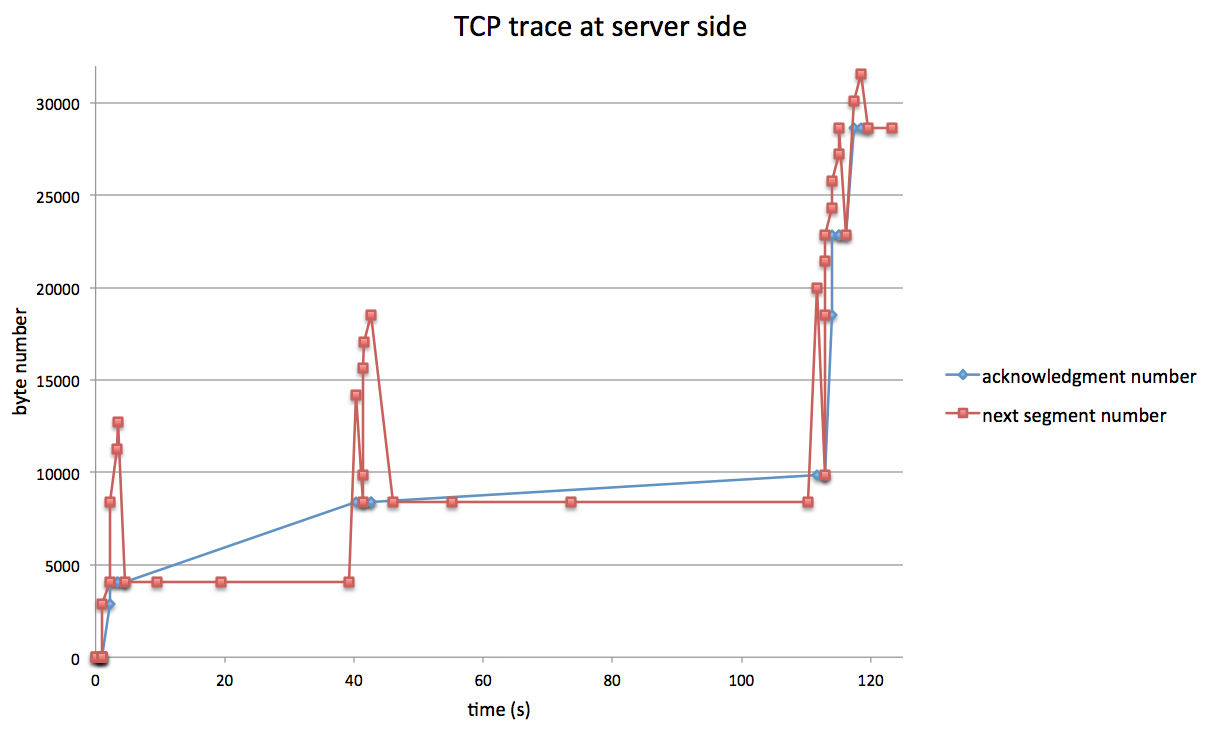

To understand these download stalls, we have to dive into TCP's mechanisms. TCP can detect packet loss in two ways:

- Triple duplicate ACKs: the server receives three duplicate acknowledgments from the recipient (the mobile) indicating that an earlier segment has not been received. The server simply resends the missing segment while continuing to send the following ones, so the download is largely unaffected.

- Timeout: the server has not received any acknowledgment for a certain time (the retransmission timeout). It then stops sending anything other than the missing segment until that segment is acknowledged.
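
Which of the two detection paths above applies depends on how many segments are in flight. The C sketch below is a toy model, assuming a single lost segment in a flight of `window` segments, a receiver that sends one duplicate ACK per segment arriving after the hole, and the usual threshold of three duplicate ACKs from RFC 5681. It ignores refinements such as Limited Transmit and SACK, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define DUPACK_THRESHOLD 3 /* duplicate ACKs that trigger fast retransmit (RFC 5681) */

/* Duplicate ACKs the receiver can send when segment `lost` (0-based) of a
 * flight of `window` segments is dropped: one per segment arriving after
 * the hole. Assumes a single loss and no new data sent meanwhile. */
unsigned dupacks_for_loss(unsigned window, unsigned lost)
{
    assert(lost < window);
    return window - lost - 1;
}

/* true: the loss is detected by duplicate ACKs (fast retransmit);
 * false: the sender has to wait for its retransmission timeout. */
bool fast_retransmit_possible(unsigned window, unsigned lost)
{
    return dupacks_for_loss(window, lost) >= DUPACK_THRESHOLD;
}
```

With a window of 10 segments and the third one lost, seven duplicate ACKs come back and fast retransmit kicks in; with only three segments in flight, losing the first leaves just two duplicate ACKs, so the sender has to wait for the timeout.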

Because the channel is at times very slow, the TCP sending window can be too small for a loss to be detected by triple duplicate ACKs, so the server detects it by timeout instead. Moreover, TCP computes the timeout value from its latency measurements; given how much the channel's latency varies, this value is often poorly suited and may trigger unnecessary timeouts.
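
The timeout computation mentioned above is standardized in RFC 6298: TCP keeps a smoothed RTT (SRTT) and an RTT variation (RTTVAR), and sets RTO = SRTT + max(G, 4 × RTTVAR). The C sketch below follows that RFC's update rules (gains of 1/8 and 1/4, 1 s initial value and lower bound). The 1 ms clock granularity `G` and the struct and function names are assumptions, and real stacks differ in the details (Linux, for example, uses a 200 ms minimum).

```c
#include <assert.h>
#include <stdbool.h>

#define RTO_ALPHA  0.125 /* SRTT gain, 1/8 (RFC 6298) */
#define RTO_BETA   0.25  /* RTTVAR gain, 1/4 (RFC 6298) */
#define RTO_K      4.0   /* variance multiplier (RFC 6298) */
#define RTO_MIN_S  1.0   /* RFC 6298 lower bound; Linux uses 0.2 s */
#define CLOCK_G_S  0.001 /* assumed clock granularity G, in seconds */

typedef struct {
    double srtt;     /* smoothed round-trip time, seconds */
    double rttvar;   /* round-trip time variation, seconds */
    double rto;      /* current retransmission timeout, seconds */
    bool   sampled;  /* has a first RTT measurement been taken? */
} rto_estimator;

void rto_init(rto_estimator *e)
{
    e->srtt = 0.0;
    e->rttvar = 0.0;
    e->rto = 1.0;     /* initial RTO before any sample (RFC 6298) */
    e->sampled = false;
}

/* Update the estimator with a new RTT sample `r` (seconds). */
void rto_sample(rto_estimator *e, double r)
{
    assert(r > 0.0);
    if (!e->sampled) {
        e->srtt = r;
        e->rttvar = r / 2.0;
        e->sampled = true;
    } else {
        double err = e->srtt - r;
        if (err < 0.0)
            err = -err;
        /* RTTVAR uses the previous SRTT, so it is updated first. */
        e->rttvar = (1.0 - RTO_BETA) * e->rttvar + RTO_BETA * err;
        e->srtt = (1.0 - RTO_ALPHA) * e->srtt + RTO_ALPHA * r;
    }
    double var_term = RTO_K * e->rttvar;
    if (var_term < CLOCK_G_S)
        var_term = CLOCK_G_S;
    e->rto = e->srtt + var_term;
    if (e->rto < RTO_MIN_S)
        e->rto = RTO_MIN_S;
}
```

With a steady 100 ms RTT, the RTO sits at its 1 s floor, so a sudden latency jump to the tens of seconds we observed makes the timer fire and retransmit a segment that was merely delayed.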

We then took more precise measurements, packet by packet with Wireshark and tcpdump, during one of these download stalls.

Here is what we observed: given the channel's high loss rate, the retransmitted packet is sometimes lost several times in a row. Following Karn's algorithm, each new retransmission of the segment is sent after an exponentially increasing delay, which produces very long waiting times. With a typical server configuration, the server eventually closes the connection, leaving the mobile client waiting.
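
The waiting times produced by repeated losses of the same segment are easy to tally. The C sketch below assumes the timer starts at `rto` seconds, doubles after each expiry, and is capped at `rto_max`; the function name is hypothetical. For reference, Linux caps the RTO at 120 s (`TCP_RTO_MAX`) and by default gives up after 15 retransmissions (`net.ipv4.tcp_retries2`), but other stacks and configurations differ. RFC 6298 also has the sender keep the backed-off value and, per Karn's algorithm, take no RTT samples from retransmitted segments, so the long timer persists until a fresh measurement arrives.

```c
#include <assert.h>

/* Total time a sender waits while the same segment is lost `losses` times
 * in a row: each timeout doubles the RTO (exponential backoff), capped at
 * `rto_max`. Times are in seconds. */
double backoff_wait(double rto, double rto_max, unsigned losses)
{
    double total = 0.0;
    assert(rto > 0.0 && rto_max >= rto);
    for (unsigned i = 0; i < losses; i++) {
        total += rto;       /* wait for this timer to expire */
        rto *= 2.0;         /* back off before the next retransmission */
        if (rto > rto_max)
            rto = rto_max;
    }
    return total;
}
```

Starting from a 1 s RTO, five consecutive losses already cost 1 + 2 + 4 + 8 + 16 = 31 s of waiting and eight cost 247 s, whereas with a constant 1 s RTO (`rto_max` equal to `rto`) five losses cost only 5 s.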

We then customized and recompiled a Linux kernel, removing the retransmission delay increase from its TCP implementation. This modification reduced the waiting times, but the throughput remained lower than with UDP.

Conclusion

We took advantage of UDP's simplicity to characterize the communication channel in areas with poor cell coverage, then measured TCP's efficiency over this channel. Our main observation is that the poor quality of the channel, combined with TCP's flow control and retransmission algorithms, results in periods with no download at all. This is the phenomenon we generally run into when experiencing erratic download lockups in poorly covered areas.

Given how poor the transmission channel is, TCP remains fairly efficient. A large-scale, server-side TCP change does not seem feasible: the problem is very specific and only occurs in unpredictable cases, which makes it hard to justify challenging the protocol's mechanisms. A higher-level solution, for example splitting requests into smaller segments and replaying them, seems more achievable.
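
One way to approach request segmentation and replay is to fetch a large resource as a series of small byte ranges, each with its own short timeout, and to replay only the chunks that fail. The C sketch below uses the standard HTTP `Range: bytes=start-end` syntax but hides the network behind a hypothetical `fetch_fn` callback; the chunk size, retry limit, and all function names are assumptions, and `every_other_fails` merely simulates a lossy link.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Write the HTTP Range header value for chunk `index` of a resource of
 * `total` bytes cut into `chunk`-byte pieces. Returns false past the end. */
bool range_for_chunk(size_t total, size_t chunk, size_t index,
                     char *out, size_t out_len)
{
    assert(out != NULL && out_len > 0);
    if (chunk == 0 || index * chunk >= total)
        return false;
    size_t start = index * chunk;
    size_t end = start + chunk - 1;
    if (end >= total)
        end = total - 1;    /* last chunk may be shorter */
    snprintf(out, out_len, "bytes=%zu-%zu", start, end);
    return true;
}

/* Hypothetical transport hook: fetches one byte range and returns true if
 * it arrived before a short, application-chosen timeout. */
typedef bool (*fetch_fn)(const char *range, void *ctx);

/* Fetch every chunk, making up to `max_tries` attempts per chunk.
 * Returns the number of chunks fetched, or -1 if one never arrives. */
long fetch_segmented(size_t total, size_t chunk, unsigned max_tries,
                     fetch_fn fetch, void *ctx)
{
    char range[64];
    long done = 0;
    for (size_t i = 0; range_for_chunk(total, chunk, i, range, sizeof range); i++) {
        unsigned tries = 0;
        while (!fetch(range, ctx)) {
            if (++tries >= max_tries)
                return -1;
        }
        done++;
    }
    return done;
}

/* Simulated lossy link for illustration: every other call fails. */
static bool every_other_fails(const char *range, void *ctx)
{
    unsigned *calls = ctx;
    (void)range;
    return (*calls)++ % 2 == 1;
}
```

The intent is that a stalled transfer costs at most one small chunk's timeout before that chunk is requested again, rather than waiting out a backed-off TCP retransmission timer for the whole resource.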
